Speech Recognition: A Pub-level Overview

Posted on Mon 28 November 2022 in Blog

Introduction

I've been puzzling over a subject for my first blog post, but luckily an idea eventually struck. Whenever someone asks what I do in my new job and I say that I work in automatic speech recognition (ASR for short), I can see their eyes glaze over. I usually try to relate it to speech recognition technologies they've heard of, mentioning prominent voice assistants like Alexa and Siri or describing transcription software. Most people are familiar with such tech and the conversation ends there, but a few have asked what exactly that means, or what I actually do.

This is a harder question to answer off the cuff, and it really requires a more technical explanation than most people can be bothered listening to. However, it's a question worth knowing how to answer, so I thought I would outline my best non-technical explanation in a short blog post that I can point people to.

Speech recognition is the conversion of speech to text, and more broadly of audio to text. Audio processing and speech are each complex in their own right, so it makes sense that combining them is an intensely complicated problem.

So where do we start?

Raw Audio to Features

Speech for ASR is captured in an audio signal, which is itself just a sequence of digital values that approximate the analogue speech waveform. A typical audio file contains between 16,000 and 48,000 of these values (or samples) per second of audio. That is a lot of data to deal with, so the first step of speech recognition is typically extracting relevant features from the audio. This sounds more complicated than it is: all we are doing is cutting these huge audio sequences down to a more manageable size, without losing the parts that are relevant for recognising human speech.
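To make "a sequence of digital values" concrete, here is a minimal Python sketch that digitises a pure tone rather than real speech (the 440 Hz tone and the helper name `sample_tone` are purely illustrative). Each sample is simply the height of the waveform at one instant, so a single second at 16 kHz is already 16,000 numbers.

```python
import math

def sample_tone(freq_hz: float, duration_s: float, sample_rate: int = 16000) -> list[float]:
    """Digitise a pure sine tone: one amplitude value per sample period.

    Illustrative stand-in for real audio; speech waveforms are far messier.
    """
    n_samples = round(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate) for n in range(n_samples)]

one_second_16k = sample_tone(440.0, 1.0)          # 16 kHz sample rate
one_second_48k = sample_tone(440.0, 1.0, 48000)   # 48 kHz sample rate
print(len(one_second_16k), len(one_second_48k))  # 16000 48000
```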

Without getting bogged down in technical details, a good way of doing this is through Mel-Frequency Cepstral Coefficients (MFCCs for short). These represent audio in a way that more closely mirrors how the human auditory system perceives sound, and because speech has evolved to be heard by the human ear, MFCCs turn out to be a really good way of representing speech sounds too. However, this raises an obvious question: what is this mythical 'speech sound' we are looking for?
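The 'mel' in MFCC refers to the mel scale, which stretches out low frequencies and squashes high ones, roughly mirroring how our hearing distinguishes pitch. The sketch below uses one common (HTK-style) formula for it, m = 2595 × log10(1 + f / 700); other variants exist, and the function names are my own. It only covers the frequency-warping step: a full MFCC pipeline also involves a Fourier transform, a bank of mel-spaced filters, a logarithm and a discrete cosine transform.

```python
import math

def hz_to_mel(f_hz: float) -> float:
    """Convert a frequency in Hz to mels (HTK-style formula, one of several variants)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(m: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filter_centres(n_filters: int, f_min: float, f_max: float) -> list[float]:
    """Centre frequencies (Hz) of n_filters filters spaced evenly on the mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_filters + 1)
    return [mel_to_hz(lo + step * (i + 1)) for i in range(n_filters)]

# The same 100 Hz step is a big jump at low frequencies and a small one at high frequencies.
low_gap = hz_to_mel(200) - hz_to_mel(100)     # about 133 mel
high_gap = hz_to_mel(7100) - hz_to_mel(7000)  # about 15 mel
print(round(low_gap), round(high_gap))

# Filter centres bunch up at low frequencies and spread out at high ones.
print([round(f) for f in mel_filter_centres(8, 0, 8000)])
```

In practice you would reach for a library rather than writing this yourself; librosa, for example, provides `librosa.feature.mfcc`.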

The answer to this lies in linguistics. I am not a linguist, so I will keep this part short before I embarrass myself. Words are made up of small, distinct building blocks of sound called phones. For example, the word 'cat' can be thought of as three distinct phones ('k', 'ae', 't'), while the word 'bat' shares all but one of these phones ('b', 'ae', 't'). Because phones are what differentiate one word from another, if we can accurately tell which phone is being produced at any given point in the sequence of MFCCs, we can (hopefully) start telling words apart.
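As a toy illustration of phones as building blocks, the sketch below stores hand-written phone sequences (loosely ARPAbet-style symbols; 'cap' is an extra example added here) and finds the positions where two words differ.

```python
# Toy phone transcriptions, written by hand for illustration only.
PHONES = {
    "cat": ["k", "ae", "t"],
    "bat": ["b", "ae", "t"],
    "cap": ["k", "ae", "p"],
}

def differing_positions(a: list[str], b: list[str]) -> list[int]:
    """Indices at which two equal-length phone sequences differ."""
    if len(a) != len(b):
        raise ValueError("sequences must be the same length")
    return [i for i, (x, y) in enumerate(zip(a, b)) if x != y]

print(differing_positions(PHONES["cat"], PHONES["bat"]))  # [0]: only the first phone differs
print(differing_positions(PHONES["cat"], PHONES["cap"]))  # [2]: only the last phone differs
```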

Knowing all this, we want to convert the sequence of audio samples into a sequence of MFCCs that better represents these building blocks of speech. We can choose how long a stretch of audio each set of MFCCs covers; a relatively standard length is around 20ms. These become our 'features'.
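Here is a rough sketch of cutting audio into 20ms chunks, and of how much smaller the data becomes. For simplicity it uses non-overlapping frames, whereas real front ends commonly use overlapping windows (for example, 25ms windows advanced every 10ms); the 13 coefficients per frame is also an assumption, though a common choice.

```python
def frame_signal(samples: list[float], sample_rate: int, frame_ms: float = 20.0) -> list[list[float]]:
    """Split samples into consecutive, non-overlapping frames of frame_ms milliseconds.

    Trailing samples that do not fill a whole frame are dropped.
    """
    frame_len = int(sample_rate * frame_ms / 1000)  # 320 samples at 16 kHz and 20ms
    n_frames = len(samples) // frame_len
    return [samples[i * frame_len:(i + 1) * frame_len] for i in range(n_frames)]

one_second = [0.0] * 16000          # stand-in for 1 s of 16 kHz audio
frames = frame_signal(one_second, 16000)
n_coeffs = 13                       # assumed number of MFCCs computed per frame

print(len(frames), len(frames[0]))              # 50 320
print(len(one_second), len(frames) * n_coeffs)  # 16000 650
```

So one second of audio shrinks from 16,000 raw samples to 50 frames of 13 numbers each, which is far easier to work with.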

Turning Features into an Acoustic Model

At this point, we have converted our huge sequence of audio samples into a more manageable sequence of 20ms speech features. However, this is only the tip of the iceberg. Let's say we have an input audio file we want to transcribe: we convert it as above into a sequence of MFCCs and take the first one. We need a system that determines which speech sound is 'most alike' to that input MFCC; in other words, the statistically most likely speech sound given the input feature. This is called the acoustic model, and it is a very important component in decoding speech. I won't go into detail here about Hidden Markov Models and the other statistical methods involved, but broadly speaking it involves comparing the input against previous examples of each speech sound and producing a probability for each.
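To give a flavour of "comparing with previous examples and producing a probability for each", here is a heavily simplified sketch. It assumes each phone is described by a single Gaussian (a bell curve) over a made-up two-number feature, with hypothetical means and variances standing in for statistics learned from training data. Real HMM-based systems use richer models, many more feature dimensions and several states per phone, so treat this as an illustration of the idea rather than how any particular system works.

```python
import math

# Hypothetical "trained" statistics: per phone, a mean and variance for each
# feature dimension, as if estimated from previous labelled examples.
PHONE_MODELS = {
    "k":  {"mean": [1.0, -2.0], "var": [0.5, 0.5]},
    "ae": {"mean": [3.0, 1.0],  "var": [0.8, 0.6]},
    "t":  {"mean": [0.5, 2.5],  "var": [0.4, 0.7]},
}

def log_gaussian(x, mean, var):
    """Log density of feature vector x under a diagonal-covariance Gaussian."""
    return sum(
        -0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
        for xi, m, v in zip(x, mean, var)
    )

def phone_posteriors(feature):
    """Score a feature against every phone model, then normalise the scores to
    probabilities, assuming every phone is equally likely beforehand."""
    scores = {p: log_gaussian(feature, m["mean"], m["var"]) for p, m in PHONE_MODELS.items()}
    top = max(scores.values())  # subtract the max before exp() for numerical stability
    weights = {p: math.exp(s - top) for p, s in scores.items()}
    total = sum(weights.values())
    return {p: w / total for p, w in weights.items()}

probs = phone_posteriors([2.8, 0.9])
print(max(probs, key=probs.get))  # ae
```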

The output of this system tells us the most likely speech sound for every 20ms feature, giving us a sequence of the most likely speech sounds across the whole audio input! This brings us one step closer to decoding the file into text.
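Picking the winner for each frame, and then merging runs of the same phone (a speech sound usually lasts longer than a single 20ms frame), might look like the sketch below. The probabilities are invented, and real HMM decoders rely on the model's transition structure rather than naively collapsing repeats, so this is only a simplification.

```python
from itertools import groupby

def best_phone_per_frame(frame_probs):
    """Pick the most probable phone in each frame."""
    return [max(p, key=p.get) for p in frame_probs]

def collapse_repeats(labels):
    """Merge consecutive identical labels into one."""
    return [label for label, _ in groupby(labels)]

# Made-up per-frame probabilities for five 20ms frames.
frame_probs = [
    {"k": 0.7, "ae": 0.2, "t": 0.1},
    {"k": 0.6, "ae": 0.3, "t": 0.1},
    {"k": 0.1, "ae": 0.8, "t": 0.1},
    {"k": 0.1, "ae": 0.7, "t": 0.2},
    {"k": 0.1, "ae": 0.2, "t": 0.7},
]

per_frame = best_phone_per_frame(frame_probs)
print(per_frame)                    # ['k', 'k', 'ae', 'ae', 't']
print(collapse_repeats(per_frame))  # ['k', 'ae', 't']
```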

Above the Acoustic Model - The Lexicon

We are still missing some key information at this point: we have no idea which speech sounds make up which words! This is where a lexicon comes in. It can be thought of as a database for a language that lists every valid word that can be transcribed, alongside the possible sequences of speech sounds that make up that word. This can help account for accents too: think of the word tomato being pronounced as either 'tomayto' or 'tomahto'. Both pronunciations can be built into a lexicon so that either would be accepted and transcribed as the written word 'tomato'.
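A lexicon can be sketched as a simple dictionary from words to their accepted pronunciations. The phone symbols below are ARPAbet-style and the entries are hand-picked for illustration; the reverse index lets us go from a sequence of speech sounds back to candidate words (a list, because homophones share a pronunciation).

```python
# A toy lexicon: each word maps to one or more accepted pronunciations.
LEXICON = {
    "tomato": [("t", "ah", "m", "ey", "t", "ow"),   # 'tomayto'
               ("t", "ah", "m", "aa", "t", "ow")],  # 'tomahto'
    "cat": [("k", "ae", "t")],
    "bat": [("b", "ae", "t")],
}

def build_reverse_index(lexicon):
    """Map each pronunciation back to every word that can be spoken that way."""
    index = {}
    for word, prons in lexicon.items():
        for pron in prons:
            index.setdefault(pron, []).append(word)
    return index

REVERSE = build_reverse_index(LEXICON)

def words_for(phones):
    """Look up candidate words for a phone sequence ([] if none match)."""
    return REVERSE.get(tuple(phones), [])

print(words_for(["t", "ah", "m", "ey", "t", "ow"]))  # ['tomato']
print(words_for(["t", "ah", "m", "aa", "t", "ow"]))  # ['tomato']
```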

And Above the Lexicon - The Language Model

At this point you may be thinking we must have everything we need to transcribe speech from an audio input. We have a sequence of the most likely speech sounds, and we have a way to assemble those into words. However, if a speech recognition system relied only on the acoustic model and lexicon, without caring whether the sentences it produced made sense, it would perform terribly.

If you take your phone and ask Siri or Alexa the nonsense phrase 'Blay me a song', it will automatically assume you meant to say 'Play me a song', no matter how hard you try to pronounce the 'B' sound at the start of 'Blay'. The reason for this is something called the Language Model: a component of the overall speech system that tries to produce reasonable English sentences. It looks at a wider window than a single speech sound and considers which sequence of words is most likely, given the words around each one. The human brain does this automatically: if you're in a bar and someone asks 'What would you like to dr[UNINTELLIGIBLE]?', you can safely assume they're asking for your drink order and not what you would like to drive. The language model uses the same kind of context to decide what a reasonable transcription would be.
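One classic way to build a language model is to count word pairs (bigrams) in a body of text. The sketch below does this on a hypothetical five-sentence corpus with add-one smoothing, so unseen words like 'blay' get a small but non-zero probability. Real systems train on vastly more text and often use neural language models instead, so this only shows the principle.

```python
import math
from collections import Counter

# Hypothetical tiny training corpus; real language models see vastly more text.
CORPUS = [
    "play me a song",
    "play me some music",
    "play a song",
    "what would you like to drink",
    "what would you like to eat",
]

def train_bigrams(sentences):
    """Count contexts and word pairs, with <s>/</s> marking sentence start/end."""
    contexts, bigrams = Counter(), Counter()
    for s in sentences:
        tokens = ["<s>"] + s.split() + ["</s>"]
        contexts.update(tokens[:-1])
        bigrams.update(zip(tokens, tokens[1:]))
    vocab = {t for s in sentences for t in s.split()} | {"</s>"}
    return contexts, bigrams, vocab

CONTEXTS, BIGRAMS, VOCAB = train_bigrams(CORPUS)

def sentence_logprob(sentence):
    """Add-one smoothed bigram log-probability of a whole sentence."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    v = len(VOCAB) + 1  # +1 reserves probability mass for an unknown word
    return sum(
        math.log((BIGRAMS[(a, b)] + 1) / (CONTEXTS[a] + v))
        for a, b in zip(tokens, tokens[1:])
    )

print(sentence_logprob("play me a song") > sentence_logprob("blay me a song"))  # True
```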

Bringing It All Together

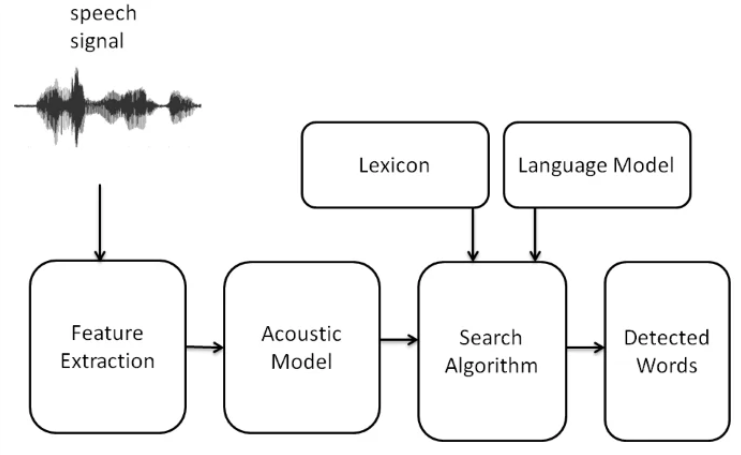

So at this point, we have a dense and complex system that balances the most likely speech sounds detected, the most likely words they can make up, and the likelihood of the overall utterance. The final system weighs these likelihoods against each other, with a different weight assigned to each, to decide the most likely transcription given the audio input. This part is actually one of the hardest problems to solve, as it requires a search algorithm that can quickly and accurately work out the most likely sequence of words at every time step of the audio input. That is a little too in-depth for this post, so we can safely assume that some algorithm sorts this out for us.
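The 'balancing with different weights' can be sketched as a weighted sum of log-probabilities. The candidates and scores below are invented, and rather than searching every possible word sequence (the genuinely hard part) it simply rescores a fixed shortlist. The hypothetical `lm_weight` parameter is the knob that decides how far the language model is trusted relative to the acoustic model.

```python
import math

# Hypothetical candidate transcriptions with made-up probabilities from an
# acoustic model (how well the audio matches) and a language model (how
# plausible the word sequence is).
CANDIDATES = {
    "blay me a song": {"acoustic": math.log(0.40), "lm": math.log(0.0001)},
    "play me a song": {"acoustic": math.log(0.35), "lm": math.log(0.02)},
}

def combined_score(scores, lm_weight):
    """Weighted sum of log-probabilities (a log-linear combination)."""
    return scores["acoustic"] + lm_weight * scores["lm"]

def best_transcription(candidates, lm_weight=1.0):
    """Return the candidate with the highest combined score."""
    return max(candidates, key=lambda c: combined_score(candidates[c], lm_weight))

print(best_transcription(CANDIDATES, lm_weight=0.0))  # blay me a song (acoustics only)
print(best_transcription(CANDIDATES, lm_weight=1.0))  # play me a song
```

With the language model switched off, the slightly better acoustic match wins; once it is switched on, the far more plausible sentence takes over, which is the 'Blay me a song' behaviour described earlier.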

This is the basis of traditional speech recognition, and even some modern systems still use a similar framework. However, much of ASR has since moved to machine learning approaches, deep learning in particular, which replace or transform many of these 'traditional' steps. That level of detail is best saved for another blog post.

After reading this post, I hope you have a slightly better idea of what goes into speech recognition and haven't been overwhelmed. I have attached a diagram above that shows the full speech recognition pipeline (provided from the attached link); hopefully it makes a little more sense now than it would have done at the start!

Note From Author

I have left out far more detail than I have included: speech recognition is an entire field of industry and research, and it's impossible to summarize in a sub-1,500-word blog post from a junior in the field. It is a highly technical subject rooted in mathematics, statistics and linguistics. If this post has interested you at all, there are plenty of fantastic resources out there: I personally think the Wikipedia page for speech recognition is a surprisingly good overview for an unfamiliar reader. For more rigour, Jurafsky and Martin's 'Speech and Language Processing' can be thought of as a bible not only for speech recognition, but for all fields of speech and text processing.