Upskilling Project: Toxicity Classifier

Posted on Wed 28 February 2024 in Projects

Introduction

The motivation behind this project was to complete a machine learning model build from scratch, so that I have a clean, well-formatted example of my coding ability and of my ability to see a project through. Before beginning, my GitHub account consisted entirely of half-finished or barely started projects, plus some code from my Master's dissertation that makes me cringe when I look back at it.

After watching a YouTube video, I was reading through the comments and noticed an incredibly rude message about the creator's accent. It had been widely downvoted (which may be part of how YouTube itself filters out toxic comments, as it hadn't been surfaced to the top of the comment list), but it got me thinking that there must be a way of automatically flagging toxic comments at the time of writing, based on the language used or the sentiments expressed.

This struck me as a good ML problem to look at: having a way to automatically flag text as toxic. Therefore, this linked Git repo was created to work on a solution.

Data

I began by looking for an appropriate dataset. The problem could be framed either as a regression problem (i.e. how toxic is the comment?) or as a classification problem (i.e. is the comment toxic?). After some searching, a regression approach seemed unlikely, as most publicly available datasets didn't give ratings of toxicity. One notable dataset on Kaggle gave each comment a human-rated value in 5 different areas (e.g. toxic, obscene, threat, insult) that models needed to try to predict. While this sounded interesting, I wanted the project repo to be something that someone could just run and have it download the dataset for them: the Kaggle dataset requires a Kaggle log-in, which adds some trickiness.

Therefore, I instead settled on this dataset from HF Datasets, which uses the same core data (a selection of Wikipedia comments), with each comment individually labelled as either toxic or non-toxic (framing the problem as classification). That means the model we eventually build estimates whether a comment is toxic or not, as opposed to giving a toxicity rating.

Data Prep

Exploring our dataset

The dataset is imbalanced (which hopefully reflects the real distribution of toxicity in typical internet comments). It contains ~200k non-toxic comments and ~22.5k toxic comments, so a roughly 1:10 imbalance between the categories. The HF dataset contains a 'balanced' training set that handles this split for us: the training data is 50/50 toxic/non-toxic, while the validation and test sets mirror the real distribution of the data (~1:10).

| Dataset Split | Non-Toxic Count | Toxic Count |
|---|---|---|
| Training | 12934 | 12934 |
| Validation | 28624 | 3291 |
| Test | 57735 | 6243 |
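As a quick sanity check on the split sizes, the toxic share of each split can be computed directly from the counts in the table above. This is only a small arithmetic sketch; the variable and function names are illustrative and not taken from the project repo.

```python
# Counts copied from the table above: (non_toxic, toxic) per split.
split_counts = {
    "training": (12934, 12934),
    "validation": (28624, 3291),
    "test": (57735, 6243),
}


def toxic_share(non_toxic: int, toxic: int) -> float:
    """Fraction of comments in a split that are labelled toxic."""
    return toxic / (non_toxic + toxic)


shares = {name: toxic_share(*counts) for name, counts in split_counts.items()}

for name, share in shares.items():
    print(f"{name:<10} toxic share: {share:.1%}")
```

The training split comes out at exactly half toxic, while the validation and test splits both sit at roughly one toxic comment in ten.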

From inspection, the dataset contained several examples that were unusually long. While this wouldn't necessarily cause a huge problem, it may hurt training performance, since every comment is padded to the maximum length. Therefore, I set a hard limit on how long a comment can be in words (250 by default). This hard limit only cuts ~4k comments from the overall count and sped up training significantly.
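A minimal sketch of this kind of length filter is below. It assumes comments are split on whitespace and that the limit is inclusive; the function name and the toy examples are hypothetical, and the actual repo may count words differently.

```python
def filter_by_word_count(examples, max_words=250):
    """Keep only (label, text) pairs whose text has at most max_words whitespace-separated words."""
    return [(label, text) for label, text in examples
            if len(text.split()) <= max_words]


toy_examples = [
    (0, "thanks for fixing the citation"),
    (1, "you are an idiot"),
    (0, "word " * 300),  # 300 words, over the default limit
]

kept = filter_by_word_count(toy_examples)
print(f"kept {len(kept)} of {len(toy_examples)} comments")
```

Dropping the over-length comments (rather than truncating them) matches the description above, where the limit removes comments from the overall count.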

From raw text to features

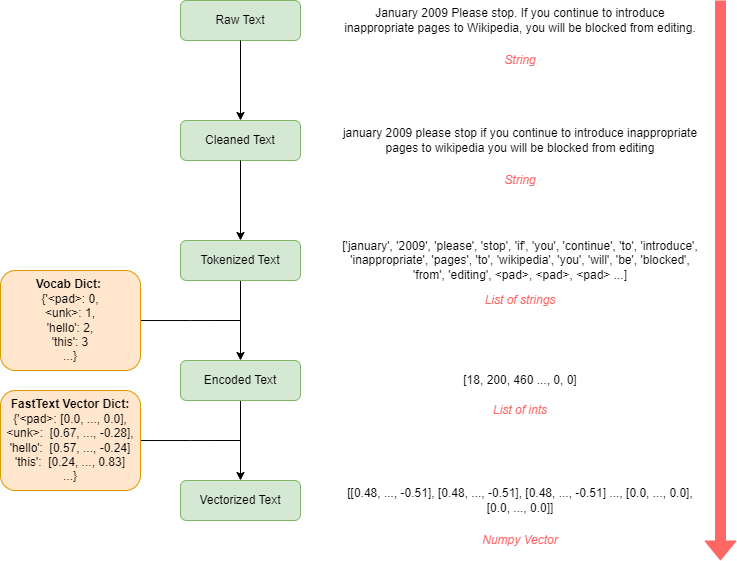

A standard encoding pipeline was followed for the dataset to prepare it for our classifier. Due to my prior positive experience with using them in a different project, FastText vectors were used as our main word embedding source.

The general steps I followed were:

- build a vocabulary dictionary from all text in the dataset (word -> idx)

- download FastText vectors and map between the vocabulary dictionary and the embeddings (idx -> embedding)

- if no FastText pre-trained embedding for word, create random embedding for it

- encode all sentences in the dataset using the word2idx -> idx2embedding pipeline

Figure: Text-to-feature Pipeline

This gives us encoded sentences that we can pass to the model in training as ('label', 'embedding') pairs. Here, the embeddings have the size [MaximumSentenceLength, EmbeddingSize], or [250, 300] if the default settings are used. In training, these are batched, so they really have the size [BatchSize, MaximumSentenceLength, EmbeddingSize] when passed to the model, or [50, 250, 300] by default.
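The pipeline steps above can be sketched roughly as follows. This is not the repo's code: the helper names, the `<pad>`/`<unk>` tokens, the whitespace tokenisation, the random-initialisation range, and the tiny 4-dimensional `toy_vectors` dictionary (standing in for the 300-dimensional FastText vectors) are all assumptions made to keep the example small and self-contained.

```python
import numpy as np

PAD, UNK = "<pad>", "<unk>"  # hypothetical special tokens


def build_vocab(texts):
    """Map every whitespace token in the corpus to an integer index (word -> idx)."""
    word2idx = {PAD: 0, UNK: 1}
    for text in texts:
        for word in text.lower().split():
            word2idx.setdefault(word, len(word2idx))
    return word2idx


def build_embedding_matrix(word2idx, pretrained, dim, seed=0):
    """Row i holds the vector for word i; words with no pretrained vector keep a random one."""
    rng = np.random.default_rng(seed)
    matrix = rng.uniform(-0.25, 0.25, size=(len(word2idx), dim)).astype(np.float32)
    matrix[word2idx[PAD]] = 0.0  # padding rows carry no signal
    for word, idx in word2idx.items():
        if word in pretrained:
            matrix[idx] = pretrained[word]
    return matrix


def encode(text, word2idx, max_len):
    """Turn a sentence into a fixed-length list of word indices, padded on the right."""
    ids = [word2idx.get(w, word2idx[UNK]) for w in text.lower().split()][:max_len]
    return ids + [word2idx[PAD]] * (max_len - len(ids))


texts = ["you are great", "you are a muppet"]
# Stand-in for pretrained vectors; "a" and "muppet" are deliberately missing.
toy_vectors = {"you": np.ones(4), "are": np.full(4, 2.0), "great": np.full(4, 3.0)}

word2idx = build_vocab(texts)
embeddings = build_embedding_matrix(word2idx, toy_vectors, dim=4)
encoded = np.array([encode(t, word2idx, max_len=6) for t in texts])
features = embeddings[encoded]  # [num_sentences, max_len, dim]
print(encoded.shape, features.shape)
```

With the defaults described above, `max_len` would be 250 and `dim` would be 300, giving one [250, 300] array per comment.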

Model Structure

I decided to use a CNN architecture for our classifier. CNNs are typically pretty good at things like spam detection or simpler classification tasks, and they are best at learning and capturing local patterns in the input data. It is worth noting that they aren't great at long-range learning (i.e. learning patterns in text that span a large sequence of words) in the way that an RNN or a transformer architecture would be.

From my intuition, this problem is well-suited to a CNN because:

- toxic language is typically located in small bursts, which the CNN filters should be able to learn

- the variable length of comments in the dataset isn't an issue, as the CNN architecture is robust to different sequence lengths

- most comments aren't that long, so there isn't really a need for more complex sequence processing

It also has the benefit of being small and efficient to train, with fewer parameters than RNNs and certainly than transformer architectures - this is useful as it makes it feasible for someone to download the repo and train the model themselves without needing a large amount of compute.
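To put a rough number on "small", the trainable parameter count can be worked out by hand. The sketch below assumes the default settings used in this post (300-dimensional embeddings, filter sizes 3, 4 and 5 with 100 filters each, 2 output classes) and that the embedding layer is frozen, so it is excluded from the count. The helper functions are illustrative only.

```python
def conv1d_params(in_channels, out_channels, kernel_size):
    """Weights plus one bias per output channel for a 1D convolution."""
    return in_channels * out_channels * kernel_size + out_channels


def linear_params(in_features, out_features):
    """Weights plus one bias per output unit for a fully connected layer."""
    return in_features * out_features + out_features


embed_dim = 300
filter_sizes = [3, 4, 5]
num_filters = [100, 100, 100]
num_classes = 2

conv_total = sum(conv1d_params(embed_dim, n, k)
                 for n, k in zip(num_filters, filter_sizes))
fc_total = linear_params(sum(num_filters), num_classes)
trainable_total = conv_total + fc_total

print(f"conv layers: {conv_total:,}")
print(f"fully connected layer: {fc_total:,}")
print(f"trainable total: {trainable_total:,}")
```

Under these assumptions the model has 360,902 trainable parameters, almost all of them in the three convolution layers.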

I began with a small and simple 1D CNN architecture: the set-up code for that is shown below.

    import torch.nn as nn


    class Simple_CNN(nn.Module):
        """ Simple 1D CNN Classifier. """

        def __init__(self,
                     pretrained_embedding=None,
                     filter_sizes=[3, 4, 5],
                     num_filters=[100, 100, 100],
                     num_classes=2,
                     dropout=0.5):
            super(Simple_CNN, self).__init__()

            # pretrained_embedding must be a 2D float tensor: [vocab_size, embed_dim]
            self.vocab_size, self.embed_dim = pretrained_embedding.shape
            self.embedding = nn.Embedding.from_pretrained(pretrained_embedding,
                                                          freeze=True)

            # set up convolution network: one Conv1d per filter size
            self.conv1d_list = nn.ModuleList([
                nn.Conv1d(in_channels=self.embed_dim,
                          out_channels=num_filters[i],
                          kernel_size=filter_sizes[i])
                for i in range(len(filter_sizes))
            ])

            # connected layer and dropout; built-in sum keeps in_features a plain int
            self.fc = nn.Linear(sum(num_filters), num_classes)
            self.dropout = nn.Dropout(p=dropout)
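The set-up code above does not include the forward pass. A common way to wire these layers together is convolution, ReLU, max-over-time pooling, concatenation, dropout, and a final linear layer, and the sketch below follows that pattern. It is an assumption about how the layers are used, not the repo's actual `forward` method. The class name `SimpleCNNSketch` and the random `toy_embedding` matrix are hypothetical, and the sketch assumes the model receives word indices, since it contains an `nn.Embedding` layer.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SimpleCNNSketch(nn.Module):
    """Same layers as Simple_CNN above, plus an assumed forward pass."""

    def __init__(self, pretrained_embedding, filter_sizes=(3, 4, 5),
                 num_filters=(100, 100, 100), num_classes=2, dropout=0.5):
        super().__init__()
        self.embed_dim = pretrained_embedding.shape[1]
        self.embedding = nn.Embedding.from_pretrained(pretrained_embedding,
                                                      freeze=True)
        self.conv1d_list = nn.ModuleList([
            nn.Conv1d(self.embed_dim, n, k)
            for n, k in zip(num_filters, filter_sizes)
        ])
        self.fc = nn.Linear(sum(num_filters), num_classes)
        self.dropout = nn.Dropout(p=dropout)

    def forward(self, input_ids):
        # [batch, seq_len] -> [batch, seq_len, embed_dim]
        x = self.embedding(input_ids)
        # Conv1d expects channels first: [batch, embed_dim, seq_len]
        x = x.permute(0, 2, 1)
        # One feature map per filter size: [batch, num_filters, seq_len - k + 1]
        feature_maps = [F.relu(conv(x)) for conv in self.conv1d_list]
        # Max-over-time pooling removes the length dimension: [batch, num_filters]
        pooled = [fm.max(dim=2).values for fm in feature_maps]
        # Concatenate, apply dropout, project to class logits: [batch, num_classes]
        return self.fc(self.dropout(torch.cat(pooled, dim=1)))


toy_embedding = torch.randn(1000, 300)  # stand-in for the FastText matrix
model = SimpleCNNSketch(toy_embedding).eval()

with torch.no_grad():
    logits_long = model(torch.randint(0, 1000, (50, 250)))
    logits_short = model(torch.randint(0, 1000, (50, 40)))

print(logits_long.shape, logits_short.shape)
```

Because the pooling step takes a maximum over the whole sequence, the two batches produce logits of the same shape even though one contains 250-word inputs and the other 40-word inputs.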

Training

Initial Training

The model's initial training parameters were set to sensible starting values:

- batch size: 50 examples per batch

- training epochs: 20

- CNN filter sizes: 3, 4, 5

- CNN number of filters: [100, 100, 100]

- CNN dropout: 0.5

- Learning Rate: 0.01
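To show where these settings plug in, here is a minimal training-loop sketch. The batch size, epoch count and learning rate come from the list above; everything else is an assumption made for illustration, including the Adam optimiser, the cross-entropy loss, the random toy data, and the small linear layer standing in for the CNN.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

config = {"batch_size": 50, "epochs": 20, "learning_rate": 0.01}

torch.manual_seed(0)
# Toy, linearly separable data standing in for the encoded comments.
features = torch.randn(200, 10)
labels = (features[:, 0] > 0).long()
loader = DataLoader(TensorDataset(features, labels),
                    batch_size=config["batch_size"], shuffle=True)

model = nn.Linear(10, 2)           # placeholder for the CNN classifier
criterion = nn.CrossEntropyLoss()  # assumed loss function
optimizer = torch.optim.Adam(model.parameters(),
                             lr=config["learning_rate"])  # assumed optimiser

epoch_losses = []
for epoch in range(config["epochs"]):
    model.train()
    running_loss = 0.0
    for batch_features, batch_labels in loader:
        optimizer.zero_grad()
        loss = criterion(model(batch_features), batch_labels)
        loss.backward()
        optimizer.step()
        # Weight each batch loss by its size to get a per-example average.
        running_loss += loss.item() * batch_features.size(0)
    epoch_losses.append(running_loss / len(loader.dataset))

print(f"first epoch loss: {epoch_losses[0]:.3f}, "
      f"last epoch loss: {epoch_losses[-1]:.3f}")
```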

After training with these settings, I checked the results.

| Epoch | Train Loss | Validation Loss | Validation Accuracy (%) | Test Accuracy (%) |
|---|---|---|---|---|
| 1 | 0.674 | 0.651 | 87.24 | 82.97 |
| 2 | 0.623 | 0.595 | 88.95 | 83.76 |
| 3 | 0.551 | 0.532 | 89.19 | 82.70 |
| 4 | 0.472 | 0.441 | 91.28 | 84.49 |
| 5 | 0.402 | 0.374 | 91.86 | 84.73 |
| 6 | 0.352 | 0.317 | 92.59 | 85.38 |
| 7 | 0.318 | 0.302 | 92.00 | 84.16 |
| 8 | 0.292 | 0.272 | 92.34 | 84.50 |
| 9 | 0.276 | 0.258 | 92.28 | 84.31 |
| 10 | 0.263 | 0.261 | 91.61 | 83.50 |
| 11 | 0.255 | 0.253 | 91.66 | 83.49 |
| 12 | 0.247 | 0.236 | 92.17 | 84.10 |
| 13 | 0.240 | 0.224 | 92.53 | 84.50 |
| 14 | 0.238 | 0.236 | 91.87 | 83.70 |
| 15 | 0.234 | 0.225 | 92.30 | 84.14 |
| 16 | 0.232 | 0.231 | 91.88 | 83.70 |
| 17 | 0.227 | 0.225 | 92.11 | 83.93 |
| 18 | 0.224 | 0.223 | 92.12 | 83.93 |
| 19 | 0.219 | 0.222 | 92.10 | 83.90 |
| 20 | 0.218 | 0.217 | 92.35 | 84.09 |

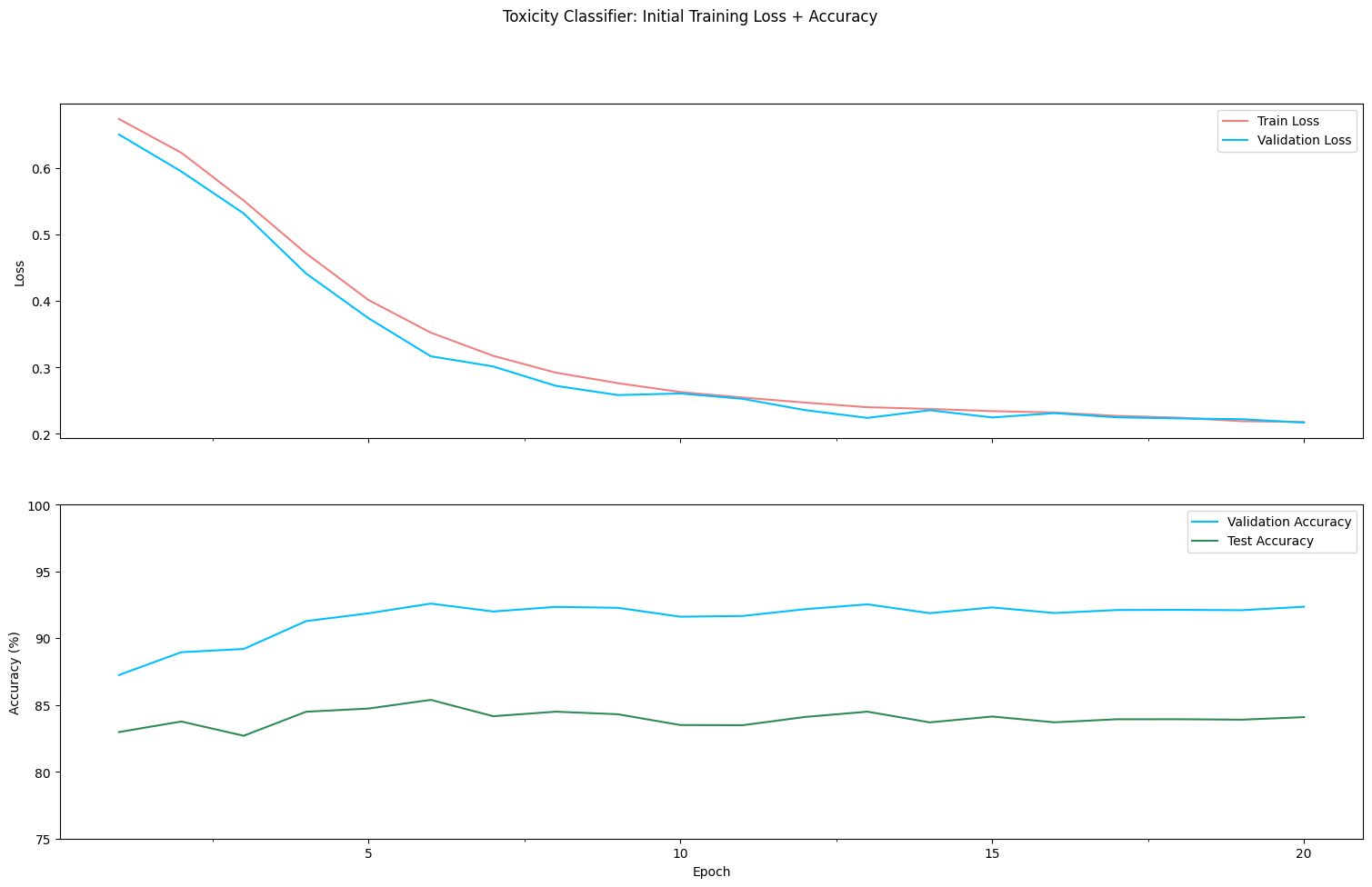

One can see that the best-performing model on our unseen test set is the model at epoch 6, with both the validation accuracy and the test accuracy topping out then, at ~92.6% and ~85.4% respectively. As the training loss continues to decrease after epoch 6, one could guess that the model starts overfitting and losing its ability to generalise well after that point. This plateauing behaviour can also be seen in the training graphs.

Figure: Initial Training Performance
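Picking the best epoch can be automated rather than read off the table. The sketch below selects the epoch with the highest validation accuracy and then looks up the test accuracy at that epoch. The accuracy lists are copied from the results table (epoch 6 rounded to two decimal places), and selecting on validation accuracy is an assumption about how one might choose a checkpoint, not a description of what the repo does.

```python
# Validation and test accuracy (%) per epoch, copied from the results table.
val_acc = [87.24, 88.95, 89.19, 91.28, 91.86, 92.59, 92.00, 92.34, 92.28, 91.61,
           91.66, 92.17, 92.53, 91.87, 92.30, 91.88, 92.11, 92.12, 92.10, 92.35]
test_acc = [82.97, 83.76, 82.70, 84.49, 84.73, 85.38, 84.16, 84.50, 84.31, 83.50,
            83.49, 84.10, 84.50, 83.70, 84.14, 83.70, 83.93, 83.93, 83.90, 84.09]

# Epochs are 1-indexed in the table, so add 1 to the list position.
best_epoch = max(range(len(val_acc)), key=val_acc.__getitem__) + 1

print(f"best epoch by validation accuracy: {best_epoch}")
print(f"validation accuracy: {val_acc[best_epoch - 1]:.2f}%")
print(f"test accuracy at that epoch: {test_acc[best_epoch - 1]:.2f}%")
```

On these numbers the validation-based choice and the best test accuracy both land on epoch 6.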

The pattern of the results indicates (to me anyway) that this solution could be tuned further: the model is admittedly very simple at this point, and so is the data prep that we use. This is something I may revisit in the future (or in another blog post), as I suspect a few changes could help:

- Trying different types of embeddings - the whole power of this model is coming from the embeddings at this point, and there are other vectors we could easily sub in.

- Doing some more involved data cleaning - as the dataset is made up of comments from the internet, it contains a lot of misspelled and nonsense words that do not have pretrained embeddings and are therefore given a random embedding. Spelling correction using some kind of edit-distance algorithm could help reduce the prevalence of these and get more out of our embeddings.

- Subword embeddings - using subword embeddings could further help account for misspellings and out-of-vocab words

- Model changes - using a larger CNN or even attempting a different architecture entirely could be worth looking into, though I suspect this would bring only marginal improvements compared to better feature engineering
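As a taste of the data-cleaning idea in the list above, here is a minimal sketch of edit-distance spelling correction using Levenshtein distance. It is a possible direction rather than something implemented in the project: the `correct` helper, the one-edit threshold, and the tiny `known_words` list (standing in for the set of words that have pretrained embeddings) are all hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions or substitutions turning a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def correct(word, known_words, max_distance=1):
    """Return the closest known word within max_distance edits, else the word unchanged."""
    if word in known_words:
        return word
    best = min(known_words, key=lambda known: levenshtein(word, known))
    return best if levenshtein(word, best) <= max_distance else word


# Stand-in for the vocabulary of words that have a pretrained embedding.
known_words = ["stupid", "article", "please", "vandalism"]
corrected = [correct(w, known_words) for w in ["stupld", "artcle", "xyzzy"]]
print(corrected)
```

Comparing every unknown word against the full embedding vocabulary this way would be slow at scale, so a real implementation would likely need a candidate-generation step or an index first.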